SEO Custom Robots.txt

Robots.txt, if you haven't heard of it, is a text file in the root directory of a blog or website. It contains rules that search engine crawlers read before they crawl the site. By writing these rules in the robots.txt file, you decide things like:

- Are search engine crawlers allowed to find your blog?

- Are search engine crawlers allowed to index your blog?

- Are search engine crawlers allowed to follow your blog?

- Are search engine crawlers allowed to access all your folders, files and blog pages?

- Are there certain folders, files or blog pages that search engine crawlers are not allowed to crawl?

- What is the name of your sitemap file that search engine crawlers should use to keep up with all future updates on your blog?
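Here is a minimal sketch of how a crawler reads these rules, using Python's standard `urllib.robotparser` module. The rules, the `/private/` folder, and the `example.blogspot.com` address are hypothetical placeholders. Python's parser is a simplified stand-in: real search engines such as Google apply their own matching logic, which can differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block one folder, allow everything else,
# and advertise a sitemap. The domain is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    base = "https://example.blogspot.com"
    for path in ["/", "/2024/01/my-post.html", "/private/draft.html"]:
        # can_fetch() answers: may this crawler request this URL?
        print(path, rp.can_fetch("Googlebot", base + path))
    # site_maps() (Python 3.8+) returns the Sitemap: URLs, or None if there are none.
    print(rp.site_maps())
```

Each question in the list maps onto a line of the file: `Disallow` and `Allow` answer which folders and pages may be crawled, and `Sitemap` names the sitemap file.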

The questions above are closely related to a blog's SEO, so every blogger should be careful when applying rules in the robots.txt file. If you want search engine crawlers to find, index, and follow your blog, you must write the rules in robots.txt accordingly. Incorrect rules can prevent search engines from finding, indexing, and following your blog. That is why, in the post How to Make a Blog in Blogger the Right Way, I told you not to touch this setting: it is a crucial part that beginner bloggers should leave alone.

What’s a Robots.txt?

According to Google, "a robots.txt file tells search engine crawlers which URLs the crawler can access on your site."

Basically, the robots.txt file is used to stop search engine crawlers from accessing certain files or pages of a blog or website. One aim is to keep a blog from being overloaded by heavy crawler traffic.

Even without a custom robots.txt, a blog will be found by search engines, especially if the blog owner or webmaster submits the blog or website to search engines for indexing. The blog's built-in robots.txt is actually enough to get your blog found by search engine crawlers.
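The claim that a blog with no restrictive rules stays crawlable can be checked with the same standard-library parser. This sketch, with a hypothetical helper name `allowed_without_rules`, parses an empty rule set and asks whether a crawler may fetch a URL. With no rules at all, the parser allows everything.

```python
from urllib.robotparser import RobotFileParser


def allowed_without_rules(url: str, agent: str = "Googlebot") -> bool:
    """Return whether `agent` may fetch `url` when robots.txt contains no rules."""
    parser = RobotFileParser()
    # No lines at all: no User-agent groups and no Disallow rules.
    # (RobotFileParser.read() also treats a 404 for robots.txt as "allow all".)
    parser.parse([])
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    print(allowed_without_rules("https://example.blogspot.com/2024/01/my-post.html"))
```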

However, certain search engine crawlers like Yandex need their "crawler name" listed in robots.txt in order to be crawled by them. It can be said that some crawlers have to be "invited" to crawl our blog and the way to invite them is via robots.txt.
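The sketch below shows how a group addressed to a named crawler sits next to the general `*` group, again using Python's `urllib.robotparser`. Under the conventions this parser follows, a crawler that finds a group with its own name obeys that group instead of the `*` group. The rules, paths, and the helper `may_fetch` are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: a general group plus a group addressed to one crawler by name.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search

User-agent: Yandex
Disallow: /p/
"""


def may_fetch(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return whether `agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    base = "https://example.blogspot.com"
    # Yandex follows only its own group, so /search is open to it but /p/ is not.
    print(may_fetch("Yandex", base + "/search/label/SEO"))
    print(may_fetch("Yandex", base + "/p/about.html"))
    # Other crawlers fall back to the * group.
    print(may_fetch("Googlebot", base + "/search/label/SEO"))
```

Because the named group replaces the `*` group for that crawler, any rule you still want it to follow must be repeated inside its own group.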


Difference between robots.txt and Meta Robots

In another post on this blog, we have explained how to set meta tags for SEO. One of the meta tags we reviewed in the post is meta robots.

Meta robots is an HTML tag that performs a similar function to robots.txt. The difference is that meta robots controls how search engines treat individual pages or articles, while robots.txt manages the crawling of the entire blog.

However, if you are using Blogger, you cannot use meta robots on your individual articles. This is due to several factors:

- Blogger does not provide a feature for editing the <head> section of an individual article. The only <head> you can edit is the one in the Blogger HTML template.

- Meta tags belong in the <head> element and cannot be used inside <body>, yet the content of a blog article sits inside the <body> tag.
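To check where a page's robots meta tag actually ends up, you can scan the page's HTML. The sketch below uses Python's built-in `html.parser` to collect every `<meta name="robots">` tag and record whether it appears inside `<head>`. The class name `RobotsMetaFinder`, the helper `robots_meta`, and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect <meta name="robots"> tags and note whether each is inside <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.found: list[tuple[str, bool]] = []  # (content, inside_head)

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "robots":
                self.found.append((attr.get("content") or "", self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def robots_meta(html: str) -> list[tuple[str, bool]]:
    """Return (content, inside_head) for every robots meta tag in the page."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return finder.found


# Hypothetical page: one tag in <head>, one misplaced inside the article body.
PAGE = """<html><head>
<meta name="robots" content="all, noodp">
</head><body>
<meta name="robots" content="noindex">
<p>Article text</p>
</body></html>"""

if __name__ == "__main__":
    for content, in_head in robots_meta(PAGE):
        print(repr(content), "in <head>" if in_head else "outside <head>")
```

A tag reported as outside `<head>` is the misplaced case described in the second point above.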

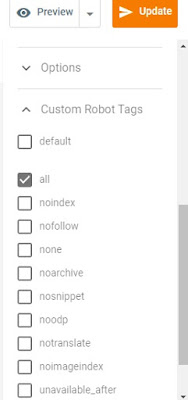

Luckily, Blogger lets you set custom robots tags in the Post settings panel on the right side of the post editor. So if you want to set robots tags for an article in Blogger, you only need to set them from the post settings menu.

How to use the Safest Robots.txt Custom Settings for Blogger SEO

Changing the content of robots.txt means using a custom robots.txt, and setting one up in Blogger is very easy. Here is how to add a custom robots.txt in Blogger:

- go to your Blogger dashboard;

- select the Settings menu;

- scroll down the page until you find the Crawlers and indexing section;

- enable custom robots.txt;

- click Custom robots.txt;

- copy the robots.txt template that we provide below and paste it into the box provided (replace the sitemap URL with your own blog's address first);

- save.
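Before pressing save, it can help to sanity-check the rules you pasted. The following is a minimal sketch using Python's `urllib.robotparser`. The sample file is a hypothetical robots.txt of the kind often seen on Blogger blogs (including a `Mediapartners-Google` group for Google's AdSense crawler); it is not the template this post refers to. `example.blogspot.com` stands in for your own blog address, and the checks in the hypothetical `check_robots` function are illustrative choices, not an official validation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical candidate file (not this post's template); replace the address with yours.
CANDIDATE = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

BLOG = "https://example.blogspot.com"  # placeholder blog address


def check_robots(text: str, blog: str) -> list[str]:
    """Return a list of problems; an empty list means these basic checks passed."""
    rp = RobotFileParser()
    rp.parse(text.splitlines())
    problems = []
    # The home page and an ordinary post should stay crawlable.
    if not rp.can_fetch("Googlebot", blog + "/"):
        problems.append("home page is blocked")
    if not rp.can_fetch("Googlebot", blog + "/2024/01/sample-post.html"):
        problems.append("a sample post URL is blocked")
    # The Sitemap line should point at this blog, not at a copied address.
    sitemaps = rp.site_maps() or []
    if not any(url.startswith(blog) for url in sitemaps):
        problems.append("no Sitemap line points at this blog")
    return problems


if __name__ == "__main__":
    issues = check_robots(CANDIDATE, BLOG)
    print("OK" if not issues else issues)
```

The sitemap check reflects the instruction to change the sitemap address first: a file copied from another blog without editing would fail it.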

Below is the safest and most SEO-friendly custom robots.txt example for Blogger.


How to set custom robots header tag in blogger for SEO

You can also set custom robots header tags, which are found below the custom robots.txt option. Here is how to set up SEO-friendly custom robots header tags:

- enable custom robots header tags;

- click Home page tags, disable everything except all and noodp, then save;

- click Archive and search page tags, disable everything except noindex and noodp (so that search engines do not index archive and search pages, including label pages that list your categories), then save;

- click Post and page tags, disable everything except all and noodp (the same as the home page tags), then save.
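To make the three settings above concrete, this sketch maps a Blogger URL to the tag set it would receive. The URL patterns are assumptions about typical Blogger addresses (posts at `/YYYY/MM/slug.html`, static pages at `/p/slug.html`, searches and labels under `/search`, archives at `/YYYY/` or `/YYYY/MM/`), and the names `page_type` and `expected_directives` are hypothetical. Blogger itself decides which tags each page actually gets.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Tag sets chosen in the steps above, keyed by Blogger's three page groups.
TAGS = {
    "home": "all, noodp",
    "archive_or_search": "noindex, noodp",
    "post_or_page": "all, noodp",
}

# Assumed URL shapes: posts /YYYY/MM/slug.html and static pages /p/slug.html.
POST_OR_PAGE = re.compile(r"^/(\d{4}/\d{2}/[^/]+\.html|p/[^/]+\.html)$")
# Assumed archive shapes: /YYYY/ or /YYYY/MM/ (trailing slash optional).
ARCHIVE = re.compile(r"^/\d{4}(/\d{2})?/?$")


def page_type(url: str) -> str:
    """Classify a Blogger URL into one of the three tag groups (or 'unknown')."""
    path = urlparse(url).path or "/"
    if path == "/":
        return "home"
    # /search?q=... and /search/label/... both count as search pages.
    if path.startswith("/search") or ARCHIVE.match(path):
        return "archive_or_search"
    if POST_OR_PAGE.match(path):
        return "post_or_page"
    return "unknown"


def expected_directives(url: str) -> Optional[str]:
    """Return the tag set configured for this URL's page group, if any."""
    return TAGS.get(page_type(url))


if __name__ == "__main__":
    for u in [
        "https://example.blogspot.com/",
        "https://example.blogspot.com/search/label/SEO",
        "https://example.blogspot.com/2024/01/",
        "https://example.blogspot.com/2024/01/my-post.html",
        "https://example.blogspot.com/p/about.html",
    ]:
        print(u, "->", expected_directives(u))
```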

Attention:


Every time you write an article, don't forget to set the custom robots tags in its post settings to all and noodp. This tells search engines they are allowed to index the article and follow its links.
